Ohm Sweet Ohm

Computer vision app that calculates carbon emissions

Challenge

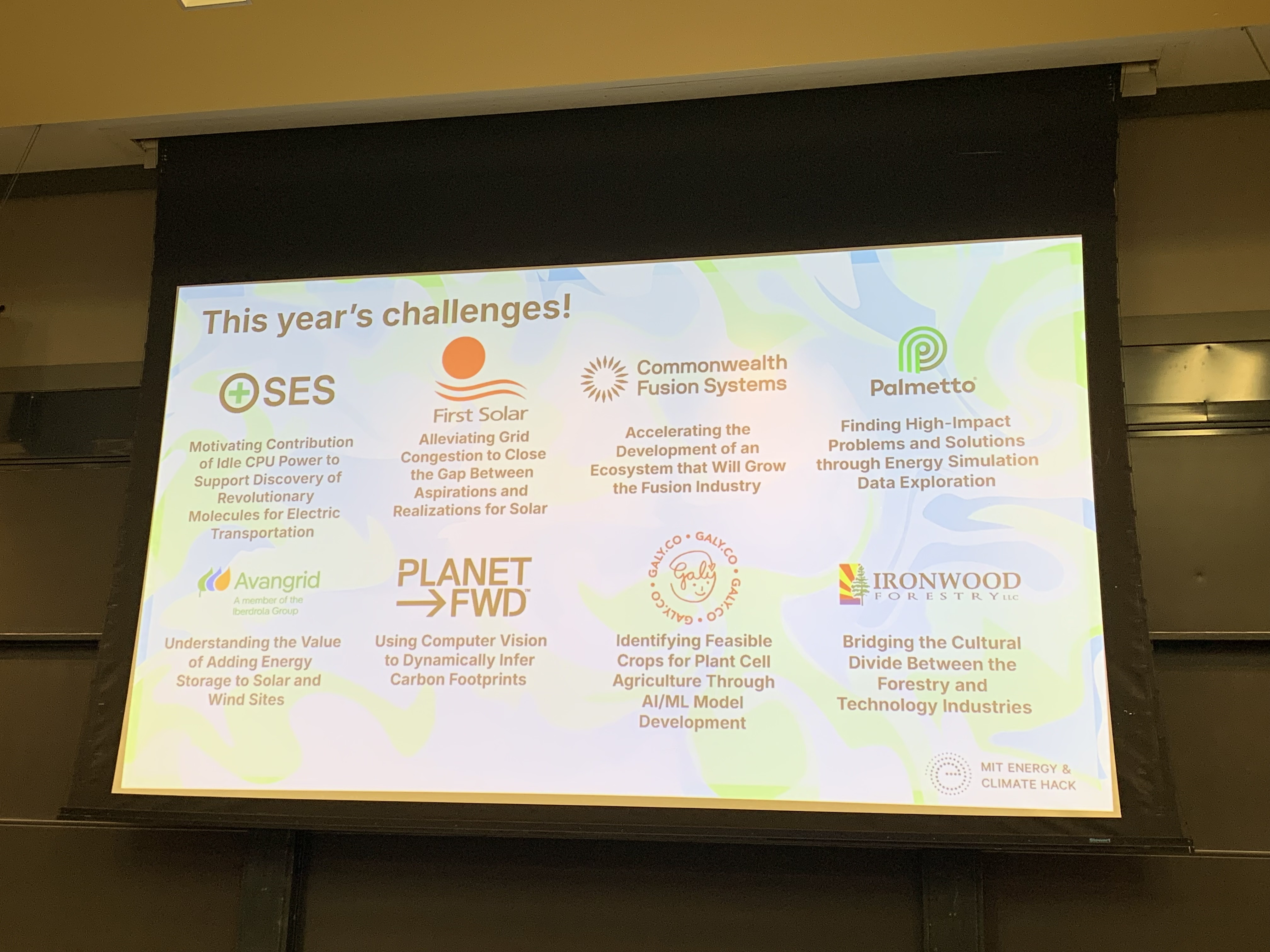

In November 2024, I took part in an Energy and Climate Hackathon run by MIT. Eight challenges were presented by different companies and startups, and my team took on the one from Planet FWD.

Planet FWD is a decarbonization platform that helps companies measure, report, and reduce the carbon footprints of their products. Their challenge was to “Use computer vision to dynamically infer carbon footprints.” We would be using their brand-new API, which took a product’s name and mass as input and returned an estimate of that product’s CO2 emissions.
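The post doesn’t document the API’s actual endpoint or schema, so here is a minimal sketch of only the contract described above (product name and mass in, CO2 estimate out), written as Python types. The class and field names, the units (grams in, kg CO2e out), and the `PlaceholderFootprintAPI` stand-in are all hypothetical; the stand-in simply multiplies mass by a factor you supply and says nothing about how Planet FWD actually computes its estimates.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class FootprintRequest:
    product_name: str  # e.g. "banana", as inferred from the photo
    mass_grams: float  # unit is an assumption; the real API may expect kg


@dataclass(frozen=True)
class FootprintEstimate:
    co2e_kg: float  # assumed unit: kilograms of CO2-equivalent


class FootprintAPI(Protocol):
    """Hypothetical interface: anything that maps (name, mass) to an estimate."""

    def estimate(self, request: FootprintRequest) -> FootprintEstimate: ...


class PlaceholderFootprintAPI:
    """Offline stand-in for testing: mass (converted to kg) times a supplied factor.

    The factors are whatever the caller passes in; none are built in.
    """

    def __init__(self, kg_co2e_per_kg: dict[str, float]) -> None:
        # Normalize keys so lookups are case-insensitive.
        self._factors = {name.lower(): f for name, f in kg_co2e_per_kg.items()}

    def estimate(self, request: FootprintRequest) -> FootprintEstimate:
        factor = self._factors.get(request.product_name.lower())
        if factor is None:
            raise KeyError(f"no factor for {request.product_name!r}")
        return FootprintEstimate(co2e_kg=request.mass_grams / 1000 * factor)
```

Coding the app against the `FootprintAPI` shape lets the stand-in be swapped for a real HTTP client later without touching the rest of the pipeline.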

Picking the computer vision model

Our first decision was which computer vision model to use. I evaluated several pre-trained open-source computer vision models, including:

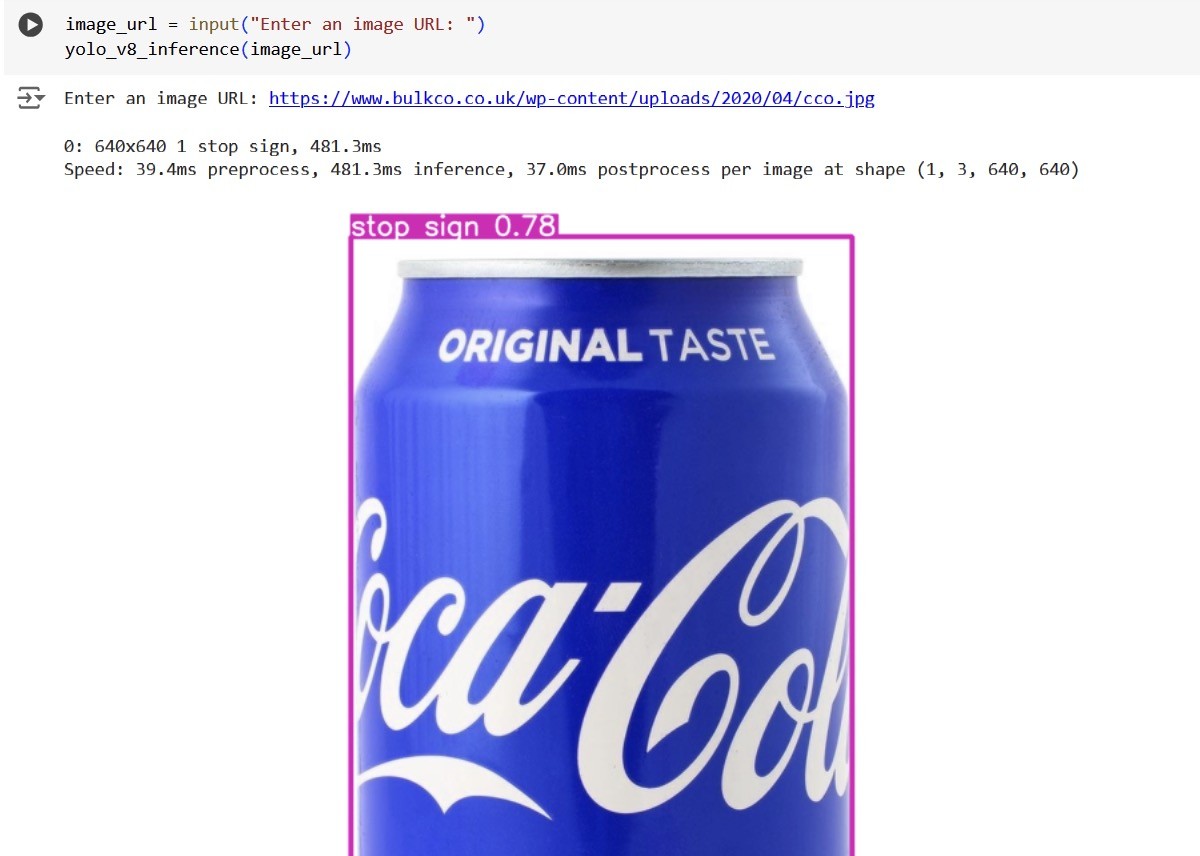

Unfortunately, none of the open-source models worked well on the kind of objects we wanted to identify (food).

To correctly identify retail and food objects, we would have had to fine-tune the open-source models on images of retail products, and we didn’t have the time or compute to do so (we only had 24 hours for the hackathon).

Instead, I decided to cough up $5 to use OpenAI’s GPT-4o-mini model. It worked really well both at identifying food and retail objects and at estimating their mass, the two parameters we needed for Planet FWD’s carbon-emissions API.
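The post doesn’t show how GPT-4o-mini was called. Below is a minimal sketch, assuming the official `openai` Python SDK’s Chat Completions endpoint, with the photo sent as a base64 data URL and JSON mode turned on so the reply is machine-readable. The prompt wording, the `Detection` type, the helper names, and the choice of grams are illustrative assumptions, not the team’s code.

```python
import base64
import json
from dataclasses import dataclass

# Hypothetical prompt; JSON mode requires the word "JSON" to appear in the messages.
PROMPT = (
    "Identify the main food or retail product in this photo and estimate its mass. "
    'Reply with JSON only: {"product_name": <string>, "mass_grams": <number>}'
)


@dataclass
class Detection:
    product_name: str
    mass_grams: float  # assumed unit


def build_messages(image_bytes: bytes, mime_type: str = "image/jpeg") -> list[dict]:
    """Pack the prompt and the image (as a base64 data URL) into one user message."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": PROMPT},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{b64}"}},
        ],
    }]


def parse_detection(reply_text: str) -> Detection:
    """Turn the model's JSON reply into typed fields (raises on malformed output)."""
    data = json.loads(reply_text)
    return Detection(product_name=str(data["product_name"]), mass_grams=float(data["mass_grams"]))


def identify_product(image_bytes: bytes) -> Detection:
    # Imported here so the helpers above work without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=build_messages(image_bytes),
        response_format={"type": "json_object"},
    )
    return parse_detection(response.choices[0].message.content)
```

Splitting message building and parsing out of the network call keeps the fiddly parts testable offline; only `identify_product` needs an API key.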

Model pipeline

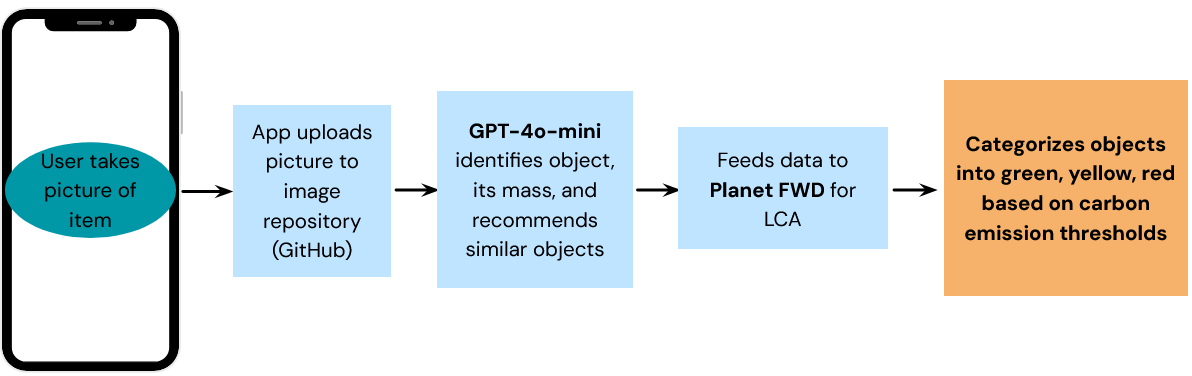

The diagram above shows the final model pipeline for our app.
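The pipeline chains two model calls: the vision model turns a photo into a (product name, mass) pair, and Planet FWD’s API turns that pair into a CO2 estimate. Here is a minimal sketch of that wiring, with both stages passed in as plain functions so they can be replaced by stubs. The function name `footprint_from_photo`, the mass sanity check, and the units (grams in, kg CO2e out) are hypothetical.

```python
from typing import Callable

# Hypothetical stage signatures; in the real app both stages are network calls.
Identify = Callable[[bytes], tuple[str, float]]  # photo -> (product name, mass in grams)
EstimateCO2e = Callable[[str, float], float]     # (name, grams) -> kg CO2e (assumed unit)


def footprint_from_photo(
    image_bytes: bytes,
    identify: Identify,
    estimate_co2e: EstimateCO2e,
) -> dict:
    """Run photo -> vision model -> footprint API and collect the results."""
    name, mass_grams = identify(image_bytes)
    # Reject obviously bad mass guesses before spending an API call on them.
    if mass_grams <= 0:
        raise ValueError(f"implausible mass estimate: {mass_grams} g")
    return {
        "product": name,
        "mass_grams": mass_grams,
        "co2e_kg": estimate_co2e(name, mass_grams),
    }
```

Keeping the stages as parameters means the whole pipeline can be exercised offline with stand-in functions before spending any API credits.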

App

Here’s the app my team and I managed to finish at 1 AM.

Ultimately, we did not win, but we had a lot of fun! 🎉